Where's the undo button? (Part I)

This is the first part of a blog series in which we examine the relationship between DevOps and safety. My name is Tuomo Niemelä and I work as a DevOps consultant at Polar Squad, which operates at the intersection of people and technology.

Read the second part of the blog here, and the third part here.

The reason

I need to confess. When I first started my DevOps journey from a more traditional developer role, I did it so that my developer colleagues wouldn’t have to do operations-related stuff. Of course I was also interested in all the technology and systems which go hand in hand with DevOps. And honestly, it’s also rewarding to be able to help someone on occasion. Anyway, I wanted to “take the hit” for my future teammates. In retrospect this is perhaps kind of silly. There’s only so much time and so many systems to improve. Maybe these so-called “hits” are meant to be taken together as a team after all. In order to really help someone somewhere, these texts and their perspectives could be more powerful than any single system improvement. DevOps represents first and foremost safety: psychological and technological. Let me elaborate further in the upcoming chapters.

“DevOps is a mindset that belongs to everyone. For me, DevOps represents first and foremost safety: psychological and technological. Safe failures enable rapid learning, development, and innovation. If you take care of people, the people take care of the rest: People matter the most.”

Some might argue that safety can sometimes be at odds with the principles of speed and agility that are central to DevOps. In order to move quickly and respond to changing customer needs and market conditions, organizations may need to take risks and push the boundaries of what is possible. This can sometimes mean cutting corners or skipping safety measures in order to move faster, which can lead to accidents or errors. But this leads to the following: if failures are an inevitable part of any complex system, an organization must be healthy enough - safe enough - to tolerate accidents and errors. Maybe I’ll end this chapter simply by citing the Navy SEAL mantra: “Slow is Smooth, Smooth is Fast”.

Technological safety

Back in the day I felt anxious running new commands and scripts on my PC. One wrong command and everything goes to hell. After discovering virtual machines and containers, things became more pleasant: I no longer have to trash my own workstation while trying out new things. Backups are also nice. Now that I’m older, I find myself running and maintaining systems which may impact hundreds of thousands of users, and I still find myself asking from time to time: “What can I change, what can I try, without destroying the whole system?” Unknown dependencies are lurking everywhere. And if one is really unlucky, there’s no proper documentation and no one to ask.

“Wouldn’t it be nice if there was an undo button on everything?”

Safe failures enable rapid learning, development, and innovation. So in the spirit of “The Third Way: Culture of Continual Experimentation and Learning” by Gene Kim, we should try to develop an environment where failing becomes as safe as possible. I know there are some people who enormously enjoy pressure and close-call situations. My goal here is to list some technical practices and solutions which could help create safer and more welcoming development and production environments for every member - and personality type - of the development team.

Backups and Data Replication: First on the list, and for a reason. Do you have important data? If yes, then take backups - automated or manual. Should there also be continuous data replication to another location? Is there a chance that the master data could get corrupted and that the corruption spreads from there? Think about it sooner rather than later.

Dry-run Modes: Enable simulations without affecting live systems. A dry-run mode is basically a switch that makes a command or script show what it would do, without actually doing it.
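As a rough illustration, a dry-run switch could look something like the Python sketch below. The function name `delete_old_logs` and the `*.log` pattern are made up for this example; the point is only that the same code path lists its targets either way and touches the disk only when `dry_run` is turned off.

```python
from pathlib import Path


def delete_old_logs(directory: Path, dry_run: bool = True) -> list[Path]:
    """Delete *.log files in `directory` (hypothetical cleanup task).

    With dry_run=True (the safe default) nothing is deleted; the function
    only reports what it would do. Returns the list of targeted files.
    """
    targets = sorted(directory.glob("*.log"))
    for path in targets:
        if dry_run:
            print(f"[dry-run] would delete {path.name}")
        else:
            path.unlink()
            print(f"deleted {path.name}")
    return targets
```

Making the dry run the default means that forgetting a flag results in a harmless report rather than a deletion.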

Version Control Systems: Allow changes to be tracked and, if necessary, rolled back. Information about who changed what, when, and why makes rolling back much easier.

Idempotency: An idempotent operation can be run multiple times and always leaves the system in the same state, which reduces the fear of repeating a process.
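A minimal sketch of an idempotent operation, assuming a simple line-based configuration file: the hypothetical helper `ensure_line` adds a line only if it is missing, so running it once or ten times leaves the file in the same state.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file at `path`.

    Returns True if the file was changed, False if it already
    contained the line. Calling it repeatedly is safe.
    """
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # already in the desired state, nothing to do
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True
```

Compare this with blindly appending the line on every run: the second run of that script would already produce a different file than the first one.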

Smaller Implementations: Breaking tasks into smaller pieces reduces what can go wrong at once. Smaller blast radius equals less stress.

Separate Test and Production Environments: Allow changes to be tried without impacting the live system. This is an enormous safety benefit, but it usually comes with added cost. But what is the cost of a possible disaster in production?

Continuous Feedback Systems: Includes automated test, integration, or deployment results fed back to the team. Reduces the fear of late problem discovery.

Immutable Infrastructure: Components are replaced rather than changed. Ensures consistent configurations and reduces fear of unpredictable systems.

Automated Security Scanning: Integrated into the CI/CD pipeline to give teams immediate feedback about potential security issues. This proactive approach enhances safety by allowing teams to address vulnerabilities before they become critical, fostering a more secure development environment.

Feature Flags/Toggles: They allow safe testing and gradual rollout of new features, with a smaller blast radius and faster rollbacks. This reduces the anxiety associated with releases.
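One common way to do a gradual rollout is to hash each user into a stable bucket and enable the feature for a growing percentage of buckets. The sketch below is only an illustration of that idea; the function name `is_enabled` and the flag name in the usage note are hypothetical, and real feature flag services offer much more.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if `flag` is on for this user at the given rollout level.

    The user is hashed into a stable bucket from 0 to 99, so the same user
    always gets the same answer, and raising the percentage only ever
    adds users; it never removes anyone who already had the feature.
    """
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent
```

Calling `is_enabled("new-checkout", user_id, 10)` would expose the feature to roughly a tenth of the users, and setting the percentage back to 0 acts as the quick rollback.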

Blue-Green Deployments: The old and new deployments exist side by side, and traffic is switched to the new one once it is ready. If something goes wrong, traffic can be switched back just as quickly, which provides a safety net during deployment.

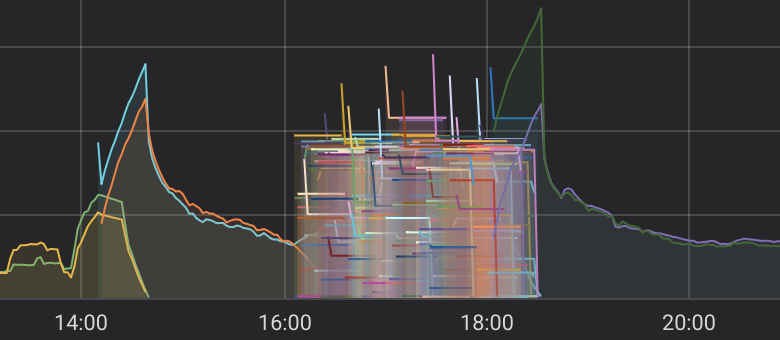
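The blue-green switch can be reduced to a tiny model: two environments, one pointer saying which of them is live. The class below is a simplified sketch with made-up names (`BlueGreenRouter`, `health_check`); in a real setup the "pointer" would be a load balancer or DNS setting.

```python
from typing import Callable


class BlueGreenRouter:
    """Tracks which of two environments receives live traffic."""

    def __init__(self, live: str = "blue", idle: str = "green") -> None:
        self.live = live
        self.idle = idle

    def promote(self, health_check: Callable[[str], bool]) -> bool:
        """Switch traffic to the idle environment if it passes the check."""
        if not health_check(self.idle):
            return False  # keep serving from the current live environment
        self.live, self.idle = self.idle, self.live
        return True

    def rollback(self) -> None:
        """Switch back to the previous environment, which is still running."""
        self.live, self.idle = self.idle, self.live
```

Because the old environment keeps running after the switch, the rollback is just the same swap in the other direction.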

Automated Rollbacks: This process leverages anomaly detection to revert changes automatically, reassuring teams that their mistakes won't have long-lasting effects.
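In its simplest form the anomaly detection can be a threshold on the error rate observed after a release. The sketch below assumes that error-rate samples are already being collected somewhere; the function name `choose_live_version` and the 5 % default threshold are illustrative assumptions, not recommendations.

```python
from statistics import mean
from typing import Sequence


def choose_live_version(
    previous: str,
    candidate: str,
    error_rates: Sequence[float],
    threshold: float = 0.05,
) -> str:
    """Decide which version should stay live after a release.

    `error_rates` are samples (0.0 to 1.0) measured while the candidate
    was serving traffic. If their average exceeds `threshold`, the
    previous version is returned, i.e. the release is rolled back.
    With no samples there is nothing to judge, so the candidate stays.
    """
    if error_rates and mean(error_rates) > threshold:
        return previous
    return candidate
```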

Fault Tolerance and Auto-healing Systems: These systems are designed to enhance reliability by automatically detecting and mitigating certain failures, thus alleviating the need for urgent manual interventions. A straightforward example is a setup where a faulty server component is automatically replaced by a standby component, ensuring uninterrupted service.

Proactive Monitoring and Alerting: By identifying issues early on, this strategy ensures system safety and allows teams to address problems calmly and methodically, avoiding panic-driven responses and promoting a secure, reliable operational environment.

Principle of Least Privilege: Actors in the system (such as people, processes, and services) are granted the minimal access level necessary to complete their tasks. This minimizes the potential for unintentional mistakes or misuse of systems, thereby ensuring both technological and psychological safety.
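A deny-by-default permission check captures the idea in a few lines. The roles and actions below are invented for the example; the important detail is that anything not explicitly granted is refused, including requests from unknown roles.

```python
# Hypothetical roles and the only actions each one is granted.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"read"},
    "deployer": {"read", "deploy"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if `action` is explicitly granted to `role`."""
    return action in ROLE_PERMISSIONS.get(role, set())
```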

Guard Rails: These are automated safeguards designed to avert major errors by enforcing predefined boundaries in system operations. While I mentioned the "Principle of Least Privilege" earlier, here are a couple more examples:

API Rate Limiting: Instituting a cap on the number of API calls permitted in a specific timeframe to maintain system stability and prevent abuse.

Limiting Cloud Resource Usage: Implementing restrictions on the consumption of cloud resources to manage operational costs and prevent resource exhaustion.
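To make the API rate limiting guard rail more concrete, here is a minimal sliding-window limiter in Python. It is a single-process sketch under simple assumptions (one shared limit, no persistence); the class name `RateLimiter` and its parameters are made up for this example.

```python
import time
from collections import deque
from typing import Callable


class RateLimiter:
    """Allows at most `max_calls` calls within any `period_seconds` window."""

    def __init__(
        self,
        max_calls: int,
        period_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_calls = max_calls
        self.period = period_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self.calls: deque[float] = deque()  # timestamps of accepted calls

    def allow(self) -> bool:
        """Return True and record the call if it fits within the limit."""
        now = self.clock()
        # Forget calls that have fallen out of the time window.
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True
```

An API handler would call `allow()` before doing any work and reject the request when it returns False.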

Runbooks and Clear Communication Channels: Step-by-step guides for handling operations, including clear communication channels for emergencies, ensuring safety even for less experienced team members.

Chaos Engineering: Controlled induction of failure helps build resilience and fosters a culture of learning without blaming. I would probably save this for later, when there are no actual “fires” left to put out.

Simplicity: This one goes to the more philosophical side of things but I wanted to end this list with it. While all these technological bells and whistles are nice, it is good to keep in mind that keeping things as simple as possible could be beneficial: even from a safety perspective.

I’m sure the previous list is far from complete. One could regard any deterministic automation, at least a tested one, as an enabler of safety on its own. Anyhow, architecturally, our aim is to eliminate single points of failure in the system. Many of the listed methods aim to do just that, directly or indirectly. But what is the greatest single point of failure? A person operating solo with little or no communication or collaboration, whether it is someone giving orders from the top of the power hierarchy or a grassroots-level engineer doing "little" tweaks to the system. This leads us to the next part of the series. Stay tuned!